Human-centric engineering

We connect industry expertise, design, and engineering to create digital products that empower users, deliver business value and make a societal impact.

21 delivery centers and 6 design studios

+2,000 digital enthusiasts

75 pts NPS

+25% revenue growth in 2022

24 years of experience, founded in a garage in Helsinki in 1999

4 continents: present in Europe, Asia and the Americas

People are talking

Don’t just take our word for it. Here’s what our clients are saying…

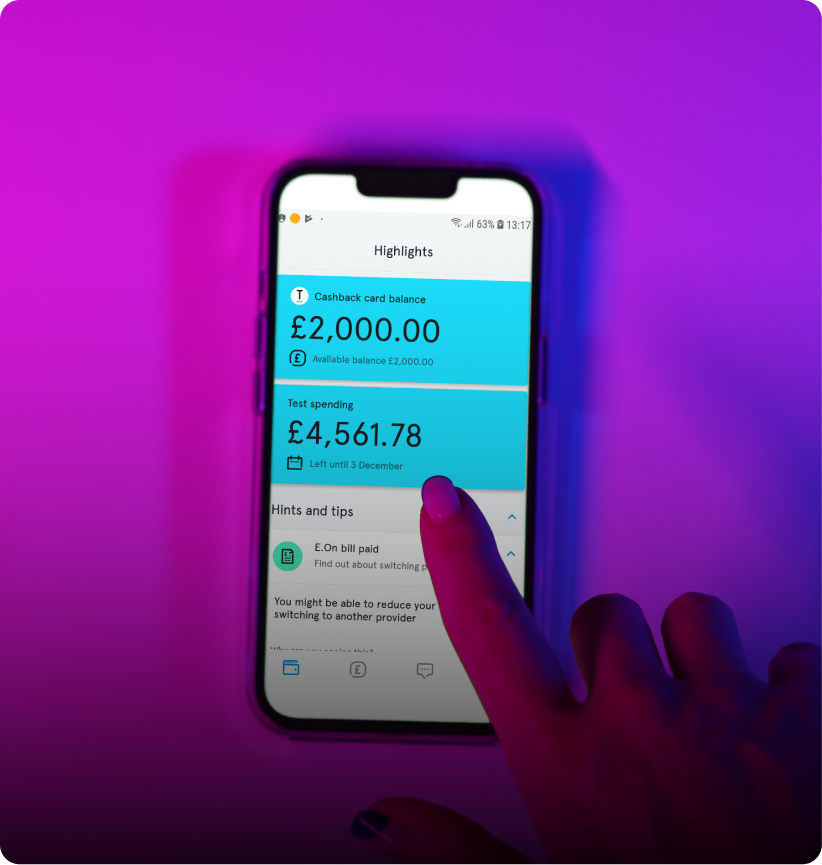

“With the support of the experts of intive’s engineering teams, we have been able to streamline the development of our new app. While the needs of our customers constantly evolve, we will ensure that new exciting features will appear with every major update.”

“A new, rebuilt platform gives us the flexibility to develop and distribute tailored products to our customers better and quicker than ever. intive’s technical expertise, proactive attitude and huge domain knowledge were crucial to making this happen.”

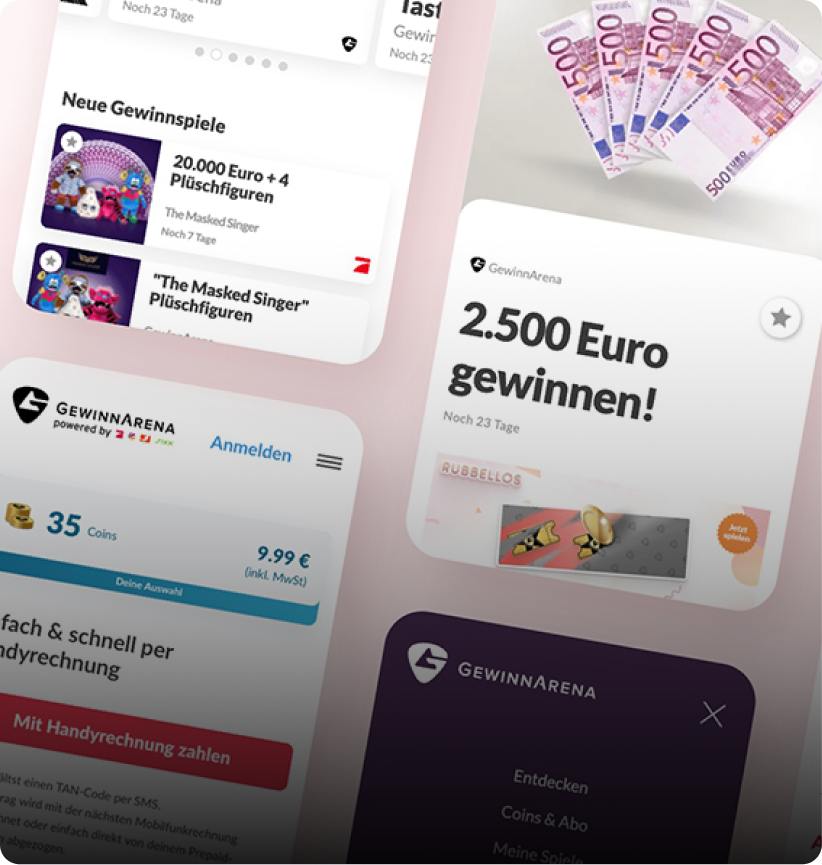

“The refreshed GewinnArena joins all our successful digital offerings. intive’s experts redesigned and rebuilt the application in less than 6 months, bringing us a future-oriented functional product that realizes our brand’s multi-channel philosophy through user-centric design.”

“Creating xarvio™ SCOUTING was a huge challenge. At first, we didn’t believe that it would be possible to deliver a fully functional product in such a short time. It’s fair to say that we benefited greatly from our cooperation with intive.”

Explore our perfect mixture of remote and in-office work

A global company

intive transcends borders, with over 2,000 team members of 37 nationalities spread across 4 continents.